Blender HDR and the reference white issue

The latest alpha of the upcoming Blender 5.0 release comes with High Dynamic Range (HDR) support for Linux on Wayland which will, if everything works out, make it into the final Blender 5.0 release on October 1, 2025. The post on the developer forum comes with instructions on how to enable the experimental support and how to test it.

If you are using Fedora Workstation 42, which ships GNOME version 48, everything needed to run Blender with HDR is already included. All that is required is an HDR-compatible display and graphics driver, and turning on HDR in the Display Settings.

Over the last few years, a lot of personal blood, sweat and tears, paid for by Red Hat, has gone into the Linux graphics stack to enable applications like Blender to add HDR support. This ranged from kernel work, like helping to get the HDR mode working on Intel laptops and improving the Colorspace and HDR_OUTPUT_METADATA KMS properties, to creating a new library for EDID and DisplayID parsing and helping with wiring things up in Vulkan.

I designed the active color management paradigm for Wayland compositors, figured out how to properly support HDR, created two Wayland protocols to let clients and compositors communicate the necessary information for active color management, and created documentation around all things color in FOSS graphics. None of this would have been possible without Pekka Paalanen from Collabora and all the other people I can’t possibly list exhaustively.

For GNOME I implemented the new API design in mutter (the GNOME Shell compositor), and helped my colleagues to support HDR in GTK.

Now that everything is shipping, applications are starting to make use of the new functionality. To see why Blender targeted Linux on Wayland, we will dig a bit into some of the details of HDR!

HDR, Vulkan and the reference white level

Blender’s HDR implementation relies on Vulkan’s VkColorSpaceKHR, which allows applications to specify the color space of their swap chain, enabling proper HDR rendering pipeline integration. The key color space in question is VK_COLOR_SPACE_HDR10_ST2084_EXT, which corresponds to the HDR10 standard using the ST.2084 (PQ) transfer function.

However, there’s a critical challenge with this Vulkan color space definition: it has an undefined reference white level.

Reference white indicates the luminance or signal level at which a diffuse white object (such as a sheet of paper, or the white parts of a UI) appears in an image. If images with different reference white levels end up at different signal levels in a composited image, the “white” in one of the images is still perceived as white, while the “white” from the other image is now perceived as gray. If you have ever scrolled through Instagram on an iPhone or played an HDR game on Windows, you will probably have noticed this effect.

The solution to this issue is called anchoring. The reference white level of all images needs to be normalized so that “white” ends up at the same signal level in the composited image.
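To make anchoring a bit more concrete, here is a minimal sketch. It assumes the images are already available as linear-light luminance values, and the function name `anchor`, the sample pixel values and the white levels are all made up for illustration. Real compositors do considerably more work than this.

```python
def anchor(luminances, source_white, target_white):
    """Scale linear-light luminance so source_white lands on target_white."""
    scale = target_white / source_white
    return [value * scale for value in luminances]


# Two hypothetical images whose diffuse white sits at different levels (cd/m²).
ui_pixels = [0.0, 40.0, 80.0]        # diffuse white assumed at 80
hdr_pixels = [0.0, 203.0, 1000.0]    # diffuse white assumed at 203, highlight above it

# The level at which white should appear in the composited image (assumed).
target_white = 200.0

anchored_ui = anchor(ui_pixels, 80.0, target_white)
anchored_hdr = anchor(hdr_pixels, 203.0, target_white)

# Both whites now share one level; the HDR highlight stays above it.
print(anchored_ui[-1], anchored_hdr[1], anchored_hdr[2])
```

After scaling, the UI white and the HDR diffuse white coincide, so neither is perceived as gray next to the other, while the highlight in the HDR image keeps its headroom above white.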

Another issue with the reference white level, specific to PQ, is the prevalent myth that the absolute luminance of a PQ signal must be replicated on the actual display a user is viewing the content on. PQ is a bit of a weird transfer characteristic because any given signal level corresponds to an absolute luminance with the unit cd/m² (also known as nit). However, the absolute luminance is only meaningful for the reference viewing environment! If an image is being viewed in the reference viewing environment of ITU-R BT.2100 (essentially a dark room) and the image signal of 203 nits is being shown at 203 nits on the display, the image appears as the artist intended. The same is not true when the same image is being viewed on a phone with the summer sun blasting on the screen from behind.

PQ is no different from other transfer characteristics in that the reference white level needs to be anchored, and that the anchoring point does not have to correspond to the luminance values that the image encodes.

Coming back to the Vulkan color space VK_COLOR_SPACE_HDR10_ST2084_EXT definition: “HDR10 (BT2020) color space, encoded according to SMPTE ST2084 Perceptual Quantizer (PQ) specification”. Neither ITU-R BT.2020 (primary chromaticity) nor ST.2084 (transfer characteristics), nor the closely related ITU-R BT.2100 define the reference white level. In practice, the reference level of 203 cd/m² from ITU-R BT.2408 (“Suggested guidance for operational practices in high dynamic range television production”) is used. Notably, however, this is not specified in the Vulkan definition of VK_COLOR_SPACE_HDR10_ST2084_EXT.

The consequences of this? On almost all platforms, VK_COLOR_SPACE_HDR10_ST2084_EXT implicitly means that the image the application submits to the presentation engine (what we call the compositor in the Linux world) is assumed to have a reference white level of 203 cd/m², and the presentation engine adjusts the signal in such a way that the reference white level of the composited image ends up at a signal value that is appropriate for the actual viewing environment of the user. On GNOME, the way to control this currently is the “HDR Brightness” slider in the Display Settings, but this will become the regular screen brightness slider in the Quick Settings menu.
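As a rough illustration of that adjustment, the sketch below implements the PQ transfer function with the constants from SMPTE ST 2084 and re-encodes a signal so that an assumed reference white of 203 cd/m² lands at a different target level. The helper `reanchor` and the target value of 100 cd/m² are hypothetical, and clipping at the PQ peak is a simplification. This is not how mutter or any other compositor actually implements it.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK = 10000.0  # cd/m² encoded by a PQ signal of 1.0


def pq_encode(luminance):
    """Inverse EOTF: absolute luminance in cd/m² -> PQ signal in [0, 1]."""
    y = max(luminance, 0.0) / PQ_PEAK
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1 + C3 * y_m1)) ** M2


def pq_decode(signal):
    """EOTF: PQ signal in [0, 1] -> absolute luminance in cd/m²."""
    e = signal ** (1 / M2)
    return PQ_PEAK * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def reanchor(signal, assumed_white=203.0, target_white=203.0):
    """Re-encode a PQ signal so assumed_white ends up at target_white.

    Hypothetical helper; values above the PQ peak are simply clipped.
    """
    luminance = pq_decode(signal) * (target_white / assumed_white)
    return pq_encode(min(luminance, PQ_PEAK))


# Reference white at 203 cd/m² sits at roughly 58% of the PQ signal range.
white_signal = pq_encode(203.0)

# A user in a dim room might want white at 100 cd/m² instead (assumed value).
dimmed_signal = reanchor(white_signal, target_white=100.0)
print(white_signal, dimmed_signal, pq_decode(dimmed_signal))
```

The point of the sketch is that the luminance encoded by the application and the luminance that ends up on the display are two different things: the application keeps submitting the same signal, and the anchoring decides where white actually lands.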

On Windows, the misunderstanding that a PQ signal value must be replicated one to one on the actual display has been immortalized in the APIs. It was not until support for HDR was added to laptops that this decision was revisited, but changing the previous APIs was already impossible at that point. Their solution was exposing the reference white level in the Win32 API and tasking applications with continuously querying the level and adjusting the image to match the new level. Few applications actually do this, with most games providing a built-in slider instead.

The reference white level of VK_COLOR_SPACE_HDR10_ST2084_EXT on Windows is essentially a continuously changing value that needs to be queried from Windows APIs outside of Vulkan.

This has two implications:

- It is impossible to write a cross-platform HDR application in Vulkan (if Windows is one of the targets)

- On Wayland, a “standard” HDR signal can just be passed on to the compositor, while on Windows, more work is required

While the cross-platform issue is solvable, and something we’re working on, the way Windows works also means that the cross-platform API might become harder to use because we cannot change the underlying Windows mechanisms.

No Windows Support

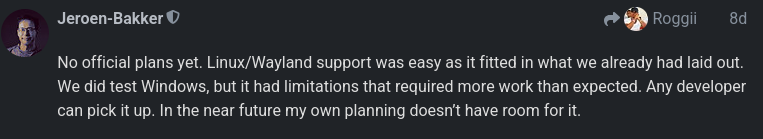

The result is that Blender currently does not support HDR on Windows.

Jeroen-Bakker explaining the lack of Windows support

The design of the Wayland color-management protocol, and the resulting active color-management paradigm of Wayland compositors, was a good choice, making it easy for developers to do the right thing, while also giving them more control if they so choose.

Looking forward

We have managed to transition the compositor model from a dumb blitter to a component which takes an active part in color management, we have image viewers and video players with HDR support and now we have tools for producing HDR content! While it is extremely exciting for me that we have managed to do this properly, we also have a lot of work ahead of us, some of which I will hopefully tell you about in a future blog post!

Do you have a comment?

Toot at me on Mastodon or send me a mail!